Wonbong Jang / Won

Hello! My name is Wonbong, but you can also call me Won.

I completed my PhD at University College London, supervised by Prof. Lourdes Agapito, and currently work as a Postdoctoral Researcher at Meta on the Monetization + GenAI team in London.

Email / Google Scholar / Twitter / LinkedIn / Threads


Papers


Kaleido: Scaling Sequence-to-Sequence Generative Neural Rendering

Shikun Liu, Kam-Woh Ng, Wonbong Jang, Jiadong Guo, Junlin Han, Haozhe Liu, Yiannis Douratsos, Juan C. Perez, Zijian Zhou, Chi Phung, Tao Xiang and Juan-Manuel Perez-Rua

ICLR, 2026

Project Page / arXiv

Kaleido applies the idea that "3D perception is not a geometric problem, but a form of visual common sense" to the novel view synthesis problem. It generates beautiful renderings and reaches the quality of per-scene optimization models with far fewer input images.


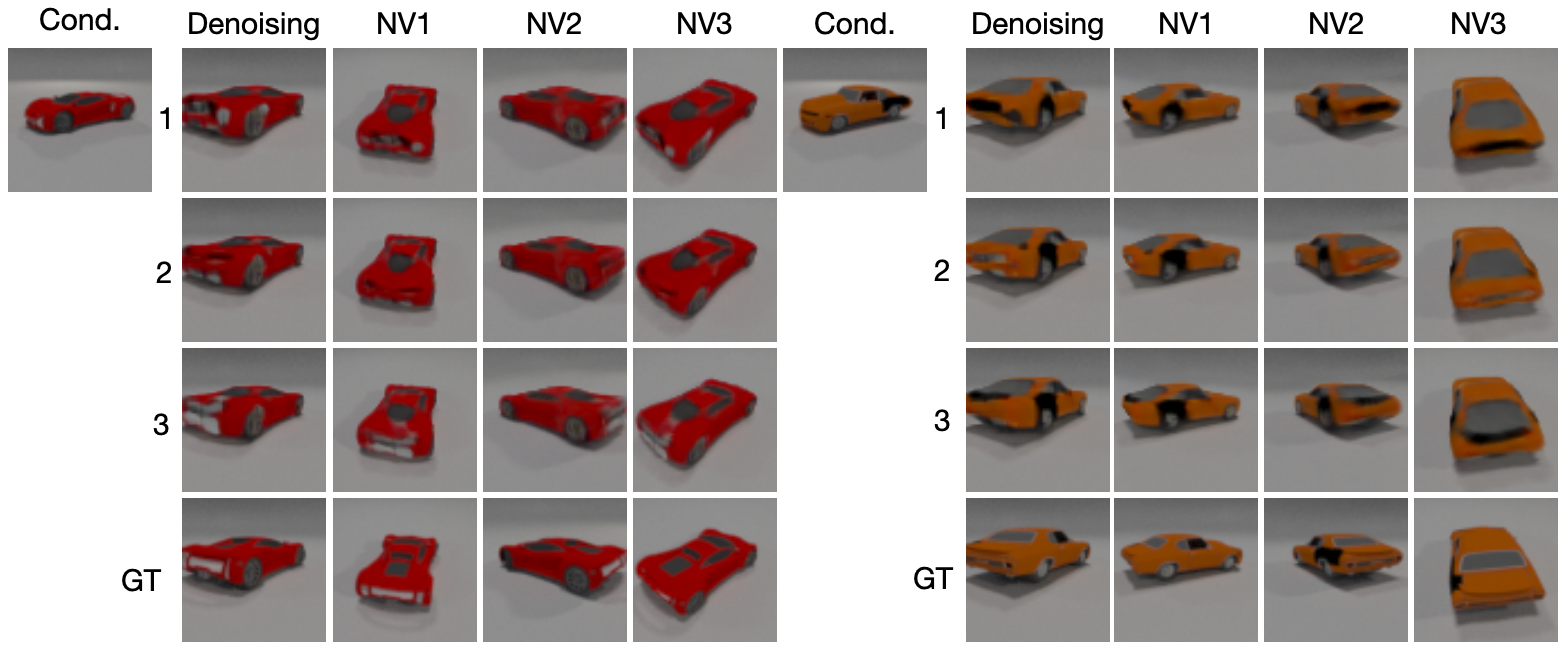

DT-NVS: Diffusion Transformers for Novel View Synthesis

Wonbong Jang, Jonathan Tremblay, Lourdes Agapito

arXiv, 2025

arXiv

DT-NVS extends NViST by leveraging diffusion models, an approach well-suited for the inherently probabilistic nature of novel view synthesis in occluded regions. The method maintains consistency with the input image while generating diverse outputs for these unseen parts, as shown in the teaser figure with an occluded car. This research was completed during the final stages of my PhD studies and is available on arXiv.


Pow3R: Empowering Unconstrained 3D Reconstruction with Camera and Scene Priors

Wonbong Jang, Philippe Weinzaepfel, Vincent Leroy, Lourdes Agapito, Jerome Revaud

CVPR, 2025

Project Webpage / Paper / arXiv / Poster PDF / Code

DUSt3R generates 3D pointmaps from regular images without requiring camera poses. In practice, significant effort is put into camera calibration or deploying additional sensors to acquire point clouds. We present Pow3R, a single network capable of processing any subset of this auxiliary information. By incorporating priors, our method achieves more accurate and precise 3D reconstructions, multi-view depth estimation, and camera pose predictions. This approach opens new possibilities, such as processing images at native resolution and performing depth completion. Additionally, Pow3R generates pointmaps in two distinct coordinate systems, enabling the model to compute camera poses more quickly and accurately.


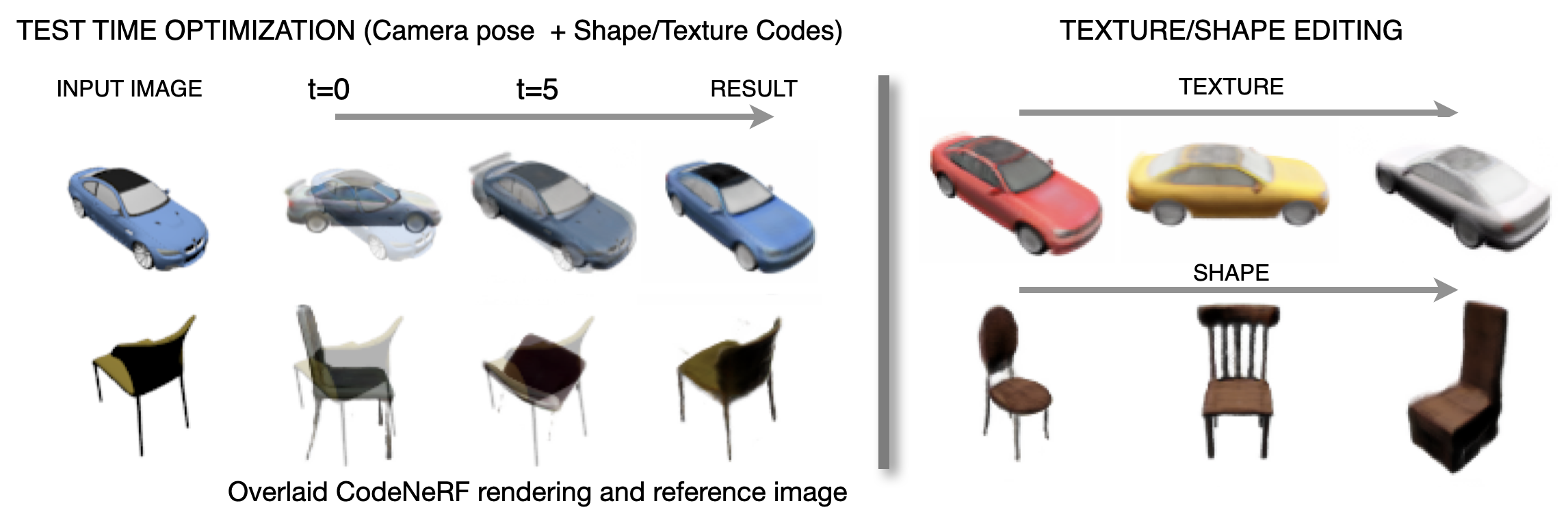

NViST: In the Wild New View Synthesis from a Single Image with Transformers

Wonbong Jang, Lourdes Agapito

CVPR, 2024

Project Page / arXiv / Code / Paper / Poster PDF

NViST turns in-the-wild single images into implicit 3D functions in a single pass using Transformers.

Extending CodeNeRF to multiple real-world scenes, feed-forward model, Transformers.


CodeNeRF: Disentangled Neural Radiance Fields for Object Categories

Wonbong Jang, Lourdes Agapito

ICCV, 2021

Project Page / arXiv / Code

Disentangled NeRF, Conditional NeRF, Generalizing NeRF.

Books and Movies

The list below contains some books and movies that I like or am currently reading or watching:

- What is Life?

- First You Write a Sentence

- What I Talk About When I Talk About Running

- Algorithms to Live By

- Man's Search for Meaning

- Why Greatness Cannot Be Planned

- The Newsroom (Season 1)

This website's template is based on Jon Barron's website.